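Wikidata:Data access

Wikidata currently contains over 100 million Items and over 650,000 Lexemes, and these numbers will continue to grow. There are many ways to access all that data; this document lays them out and helps prospective users choose the method that best suits their needs.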

It's crucial to choose an access method that gives you the data you need in the quickest, most efficient way while not putting unnecessary load on Wikidata; this page is here to help you do just that.

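Before you begin

Using Wikidata's data

Wikidata offers a wide range of general data about everything under the sun. All of that data is licensed under CC0, "No rights reserved", for the public domain.

Changes to APIs and other methods of accessing Wikidata are subject to the Stable Interface Policy. Data sources on this page are not guaranteed to be stable interfaces.

Wikimedia projects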

This document is about accessing data from outside Wikimedia projects. If you need to present data from Wikidata in another Wikimedia project, where you can employ parser functions, Lua and/or other internal-only methods, refer to How to use data on Wikimedia projects.

Data best practices

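We offer the data in Wikidata freely and, under CC-0, with no requirement for attribution. In return, we would greatly appreciate it if you mention Wikidata as the origin of your data in your project.

Doing so helps us ensure that the project stays around for a long time and keeps providing you with up-to-date, high-quality data. We also promote the best projects that use Wikidata's data.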

Some examples for attributing Wikidata: "Powered by Wikidata", "Powered by Wikidata data", "Powered by the magic of Wikidata", "Using Wikidata data", "With data from Wikidata", "Data from Wikidata", "Source: Wikidata", "Including data from Wikidata" and so forth. You can also use one of our ready-made files.

You may use the Wikidata logo mentioned above, but when doing so you should not in any way imply endorsement by Wikidata or by the Wikimedia Foundation.

Access best practices

When accessing Wikidata's data, observe the following best practices:

- Follow the User-Agent policy – send a good User-Agent header.

- Follow the robot policy: send Accept-Encoding: gzip,deflate and don't make too many requests at once.

- If you get a 429 Too Many Requests response, stop sending further requests for a while (see the Retry-After response header).

- When available (such as with the Wikidata Query Service), set the lowest timeout that makes sense for your data.

- When using the MediaWiki Action API, make liberal use of the maxlag parameter and consult the rest of the guidelines laid out in API:Etiquette.
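The practices above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not an official client: the tool name and contact address in USER_AGENT are hypothetical placeholders, and the five-second fallback delay and the three-attempt limit are arbitrary assumptions.

```python
import gzip
import time
import urllib.error
import urllib.request
import zlib

# Hypothetical tool name and contact details; replace them with your own.
USER_AGENT = "ExampleDataTool/0.1 (https://example.org/tool; tool@example.org)"


def build_request(url):
    """Attach an identifying User-Agent and ask for compressed responses."""
    return urllib.request.Request(
        url,
        headers={"User-Agent": USER_AGENT, "Accept-Encoding": "gzip,deflate"},
    )


def retry_delay(retry_after, default=5.0):
    """Turn a Retry-After header value given in seconds into a wait time."""
    try:
        return max(0.0, float(retry_after))
    except (TypeError, ValueError):
        # Header missing, or given as an HTTP date: use the assumed default.
        return default


def decode_body(body, encoding):
    """Undo the compression named in the Content-Encoding response header."""
    if encoding == "gzip":
        return gzip.decompress(body)
    if encoding == "deflate":
        return zlib.decompress(body)
    return body


def fetch(url, max_attempts=3):
    """Fetch a URL, pausing whenever the server answers 429."""
    for _ in range(max_attempts):
        try:
            with urllib.request.urlopen(build_request(url), timeout=30) as resp:
                return decode_body(resp.read(), resp.headers.get("Content-Encoding"))
        except urllib.error.HTTPError as err:
            if err.code != 429:
                raise
            time.sleep(retry_delay(err.headers.get("Retry-After")))
    raise RuntimeError(f"still rate-limited after {max_attempts} attempts")
```

Calling fetch("https://www.wikidata.org/wiki/Special:EntityData/Q42.json") would then return the decompressed response body as bytes.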

Search

What is it?

Wikidata offers an Elasticsearch index for traditional searches through its data: Special:Search

When to use it?

Use search when you need to look for a text string, or when you know the names of the entities you're looking for but not the exact entities themselves. It's also suitable for cases in which you can specify your search based on some very simple relations in the data.

Don't use search when the relations in your data are better described as complex.

Details

You can make your search more powerful with these additional keywords specific to Wikidata: haswbstatement, inlabel, wbstatementquantity, hasdescription, haslabel. This search functionality is documented on the CirrusSearch extension page. It also has its own API action.
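As a rough illustration of combining free text with the haswbstatement keyword, the sketch below builds a search URL for the MediaWiki Action API. It assumes the standard list=search module with its srsearch and srlimit parameters; the helper name build_search_url and the example values are made up for illustration.

```python
from urllib.parse import urlencode

API_URL = "https://www.wikidata.org/w/api.php"


def build_search_url(text="", statements=(), limit=10):
    """Build a full-text search URL, optionally narrowed by statements.

    `statements` is a sequence of (property ID, value ID) pairs, each of
    which becomes one haswbstatement:P=Q search keyword.
    """
    terms = [text] if text else []
    terms += [f"haswbstatement:{prop}={value}" for prop, value in statements]
    params = {
        "action": "query",
        "list": "search",
        "srsearch": " ".join(terms),
        "srlimit": limit,
        "format": "json",
    }
    return f"{API_URL}?{urlencode(params)}"


# Entities matching "Adams" that are an instance of (P31) human (Q5).
example_url = build_search_url("Adams", [("P31", "Q5")])
```

Because the keywords live inside the search string itself, the same string can be pasted into Special:Search to check the results by hand.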

Linked Data Interface (URI)

What is it?

The Linked Data Interface provides access to individual entities via URI: http://www.wikidata.org/entity/Q???

When to use it?

Use the Linked Data Interface when you need to obtain individual, complete entities that are already known to you.

Don't use it when you're not clear on which entities you need – first try searching or querying. It's also not suitable for requesting large quantities of data.

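Details

Each Item or Property has a persistent URI made up of the Wikidata concept namespace and the Item or Property ID (e.g., Q42, P31), as well as concrete data that can be accessed through that Item's or Property's data URL.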

The namespace for Wikidata's data about entities is https://wikidata.org/wiki/Special:EntityData.

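Appending an entity's ID to this prefix (/entity/ for short) creates the abstract, format-neutral form of the entity's data URL. When you access a resource in the Special:EntityData namespace, the special page applies content negotiation to determine the output format. If you open the URL in a normal web browser, you will see an HTML page containing data about the entity, because web browsers prefer HTML. A linked-data client, however, receives the entity data in a format such as JSON or RDF, depending on what the client specifies in its HTTP Accept: header.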

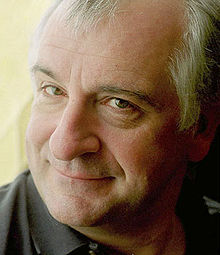

- For example, take this concept URI for Douglas Adams – that's a reference to the real-world person, not to Wikidata's concrete description:

http://www.wikidata.org/entity/Q42

- As a human being with eyes and a browser, you will likely want to access data about Douglas Adams by using the concept URI as a URL. Doing so triggers an HTTP redirect and forwards the client to the data URL that contains Wikidata's data about Douglas Adams: https://www.wikidata.org/wiki/Special:EntityData/Q42.

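When you need to bypass content negotiation, for example to view non-HTML content in a web browser, you can request an entity's data in a specific format by appending the corresponding extension to the data URL; examples include .json, .rdf, .ttl, .nt and .jsonld. For example, https://www.wikidata.org/wiki/Special:EntityData/Q42.json gives you Item Q42 in JSON format.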
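The two ways of selecting a format can be sketched as follows. This is an illustration under assumptions rather than a reference client: the MIME types in the comments are the usual ones for these formats, the User-Agent string is a hypothetical placeholder, and the helper names are invented here.

```python
import urllib.request

DATA_PREFIX = "https://www.wikidata.org/wiki/Special:EntityData/"

# Extensions taken from the examples in the text above.
KNOWN_EXTENSIONS = {"json", "rdf", "ttl", "nt", "jsonld"}

# Hypothetical tool name and contact address; replace with your own.
USER_AGENT = "ExampleDataTool/0.1 (tool@example.org)"


def negotiated_request(entity_id, mime_type="application/json"):
    """Request the format-neutral data URL and let the Accept header decide.

    Typical values (assumed here): application/json, text/turtle,
    application/rdf+xml, application/n-triples, application/ld+json.
    """
    return urllib.request.Request(
        DATA_PREFIX + entity_id,
        headers={"Accept": mime_type, "User-Agent": USER_AGENT},
    )


def explicit_format_url(entity_id, extension):
    """Bypass content negotiation by appending a format extension."""
    if extension not in KNOWN_EXTENSIONS:
        raise ValueError(f"unexpected extension: {extension!r}")
    return f"{DATA_PREFIX}{entity_id}.{extension}"
```

Passing negotiated_request("Q42", "text/turtle") to urllib.request.urlopen should yield Turtle, while explicit_format_url("Q42", "json") produces a URL that can simply be opened in a browser.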

Less verbose RDF output

By default, the RDF data that the Linked Data interface returns is meant to be complete in itself, so it includes descriptions of other entities it refers to. If you want to exclude that information, you can append the query parameter ?flavor=dump to the URL(s) you request.

By setting the flavor query parameter on the URL, you can control exactly what kind of data gets returned:

- ?flavor=dump: Excludes descriptions of entities referred to in the data.

- ?flavor=simple: Provides only truthy statements (best-ranked statements without qualifiers or references), along with sitelinks and version information.

- ?flavor=full (default): Returns all data. (You don't need to specify this because it's the default.)

If you want a deeper insight into exactly what each option entails, you can take a peek into the source code.

Revisions and caching

You can request specific revisions of an entity with the revision query parameter: https://www.wikidata.org/wiki/Special:EntityData/Q42.json?revision=112.

The following URL formats are used by the user interface and by the query service updater, respectively, so if you use one of the same URL formats there’s a good chance you’ll get faster (cached) responses:

- https://www.wikidata.org/wiki/Special:EntityData/Q42.json?revision=1600533266 (JSON)

- https://www.wikidata.org/wiki/Special:EntityData/Q42.ttl?flavor=dump&revision=1600533266 (RDF without descriptions of other entities)
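A small helper can assemble data URLs in exactly these shapes. The function name entity_data_url is invented for this sketch; the flavor values and the revision parameter are the ones described above.

```python
from urllib.parse import urlencode

DATA_PREFIX = "https://www.wikidata.org/wiki/Special:EntityData/"
FLAVORS = {"dump", "simple", "full"}


def entity_data_url(entity_id, extension, flavor=None, revision=None):
    """Build a data URL with optional flavor and revision parameters.

    The flavor is emitted before the revision so that the result matches
    the URL shape used by the query service updater.
    """
    params = {}
    if flavor is not None:
        if flavor not in FLAVORS:
            raise ValueError(f"unknown flavor: {flavor!r}")
        params["flavor"] = flavor
    if revision is not None:
        params["revision"] = int(revision)
    url = f"{DATA_PREFIX}{entity_id}.{extension}"
    return f"{url}?{urlencode(params)}" if params else url


json_url = entity_data_url("Q42", "json", revision=1600533266)
rdf_url = entity_data_url("Q42", "ttl", flavor="dump", revision=1600533266)
```

To benefit from cached responses you would first need the entity's current revision ID, which this sketch takes as a given input.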

Wikidata Query Service

What is it?

The Wikidata Query Service (WDQS) is Wikidata's own SPARQL endpoint. It returns the results of queries made in the SPARQL query language: https://query.wikidata.org

When to use it?

Use WDQS when you know only the characteristics of your desired data.

Don't use WDQS for performing text or fuzzy search – FILTER(REGEX(...)) is an antipattern. (Use search in such cases.)

WDQS is also not suitable when your desired data is likely to be large, a substantial percentage of all Wikidata's data. (Consider using a dump in such cases.)

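Details

You can query Wikidata's data through our SPARQL endpoint, the Wikidata Query Service. The service can be used both as an interactive web interface and programmatically, by submitting GET or POST requests to https://query.wikidata.org/sparql.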
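Programmatic access might look like the following sketch, which sends a GET request and flattens the reply. It assumes the endpoint returns the standard SPARQL JSON results format when asked for application/sparql-results+json; the User-Agent string, the helper names and the example query (five instances of Q146) are illustrative choices only.

```python
import json
import urllib.request
from urllib.parse import urlencode

SPARQL_ENDPOINT = "https://query.wikidata.org/sparql"

# Hypothetical tool name and contact address; replace with your own.
USER_AGENT = "ExampleDataTool/0.1 (tool@example.org)"

EXAMPLE_QUERY = """
SELECT ?item ?itemLabel WHERE {
  ?item wdt:P31 wd:Q146 .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en" . }
}
LIMIT 5
"""


def build_sparql_request(query):
    """Prepare a GET request that asks for SPARQL JSON results."""
    url = f"{SPARQL_ENDPOINT}?{urlencode({'query': query})}"
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/sparql-results+json",
            "User-Agent": USER_AGENT,
        },
    )


def rows(results):
    """Flatten SPARQL JSON results into dicts of variable name -> value."""
    return [
        {var: cell["value"] for var, cell in binding.items()}
        for binding in results["results"]["bindings"]
    ]


def run_query(query, timeout=60):
    """Send the query and return the flattened result rows."""
    with urllib.request.urlopen(build_sparql_request(query), timeout=timeout) as resp:
        return rows(json.load(resp))
```

run_query(EXAMPLE_QUERY) would return one dictionary per result row, keyed by item and itemLabel. For long queries a POST request is the usual alternative, since query strings in URLs have practical length limits.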

The query service is best used when your intended result set is scoped narrowly, i.e., when you have a query you're pretty sure already specifies your resulting data set accurately. If your idea of the result set is less well defined, then the kind of work you'll be doing against the query service will more resemble a search; frequently you'll first need to do this kind of search-related work to sharpen up your query. See the Search section.

Linked Data Fragments endpoint

What is it?

The Linked Data Fragments (LDF) endpoint is a more experimental method of accessing Wikidata's data by specifying patterns in triples: https://query.wikidata.org/bigdata/ldf. Computation occurs primarily on the client side.

When to use it?

Use the LDF endpoint when you can define the data you're looking for using triple patterns, and when your result set is likely to be fairly large. The endpoint is good to use when you have significant computational power at your disposal.

Since it's experimental, don't use the LDF endpoint if you need an absolutely stable endpoint or a rigorously complete result set. And as mentioned before, only use it if you have sufficient computational power, as the LDF endpoint offloads computation to the client side.

Details

If you have partial information about what you're looking for, such as when you have two out of three components of your triple(s), you may find what you're looking for by using the Linked Data Fragments interface at https://query.wikidata.org/bigdata/ldf. See the user manual and community pages for more information.
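A request with two known components and one unknown could be built roughly as shown below. The query parameter names subject, predicate and object are an assumption based on common Triple Pattern Fragments conventions, so check them against the user manual before relying on them; the helper name is invented for this sketch.

```python
from urllib.parse import urlencode

LDF_ENDPOINT = "https://query.wikidata.org/bigdata/ldf"


def triple_pattern_url(subject="", predicate="", obj=""):
    """Build an LDF request URL; an empty component acts as a wildcard.

    The parameter names are assumed, not confirmed by this document.
    """
    params = {"subject": subject, "predicate": predicate, "object": obj}
    return f"{LDF_ENDPOINT}?{urlencode(params)}"


# Every subject whose "instance of" (P31) value is Q146.
pattern_url = triple_pattern_url(
    predicate="http://www.wikidata.org/prop/direct/P31",
    obj="http://www.wikidata.org/entity/Q146",
)
```

The response is paged, so a client typically follows the paging links in each fragment and does any joining of patterns itself, which is where the client-side computation mentioned above comes from.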

Wikibase REST API

What is it?

The Wikibase REST API is an OpenAPI-based interface that allows users to interact with, retrieve and edit items and statements on Wikibase instances – including of course Wikidata: Wikidata REST API

When to use it?

The Wikibase REST API is still under development, but for Wikidata it's intended to functionally replace the Action API as it's a dedicated interface made just for Wikibase/Wikidata.

The use cases for the Action API apply to the Wikibase REST API as well. Use it when your work involves:

- Editing Wikidata

- Getting direct data about entities themselves

Don't use the Wikibase REST API when your result set is likely to be large. (Consider using a dump in such cases.)

It's better not to use the Wikibase REST API when you'll need to further narrow the result of your API request. In such cases it's better to frame your work as a search (for Elasticsearch) or a query (for WDQS).

Details

The Wikibase REST API has OpenAPI documentation using Swagger. You can also review the developer documentation.
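For orientation only, the sketch below builds the URL for reading a single Item. The base path, the version segment and the entities/items route are assumptions that may not match the deployed API, particularly while it is still under development, so confirm them in the OpenAPI documentation first.

```python
import re

# Assumed base path; verify it against the OpenAPI documentation.
REST_BASE = "https://www.wikidata.org/w/rest.php/wikibase"


def item_url(item_id, api_version="v1"):
    """Build the (assumed) REST URL for reading one Item as JSON."""
    if not re.fullmatch(r"Q[1-9]\d*", item_id):
        raise ValueError(f"not an Item ID: {item_id!r}")
    return f"{REST_BASE}/{api_version}/entities/items/{item_id}"
```

Keeping the version as a parameter makes it easy to follow the API as its routes are revised.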

MediaWiki Action API

What is it?

The Wikidata API is MediaWiki's own Action API, extended to include some Wikibase-specific actions: https://wikidata.org/w/api.php

When to use it?

Use the API when your work involves:

- Editing Wikidata

- Getting data about entities themselves such as their revision history

- Getting all of the data of an entity in JSON format, in small groups of entities (up to 50 entities per request).

Don't use the API when your result set is likely to be large. (Consider using a dump in such cases.)

The API is also poorly suited to situations in which you want to request the current state of entities in JSON. (For such cases consider using the Linked Data Interface, which is likelier to provide faster responses.)

Finally, it's probably a bad idea to use the API when you'll need to further narrow the result of your API request. In such cases it's better to frame your work as a search (for Elasticsearch) or a query (for WDQS).

Details

The MediaWiki Action API used for Wikidata is meticulously documented on Wikidata's API page. You can explore and experiment with it using the API Sandbox.
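Fetching entities in groups of at most 50 per request could be sketched as follows, using the wbgetentities action together with the maxlag parameter. The maxlag value of 5 seconds is a commonly used setting and an assumption here, and the helper names are invented for this example.

```python
from urllib.parse import urlencode

API_URL = "https://www.wikidata.org/w/api.php"
MAX_IDS_PER_REQUEST = 50  # the per-request limit mentioned above


def batches(ids, size=MAX_IDS_PER_REQUEST):
    """Split a list of entity IDs into consecutive groups of `size`."""
    for start in range(0, len(ids), size):
        yield ids[start:start + size]


def wbgetentities_urls(ids, maxlag=5):
    """Build one wbgetentities URL per batch of entity IDs."""
    urls = []
    for batch in batches(ids):
        params = {
            "action": "wbgetentities",
            "ids": "|".join(batch),
            "format": "json",
            "maxlag": maxlag,
        }
        urls.append(f"{API_URL}?{urlencode(params)}")
    return urls


# 120 IDs are split into requests of 50, 50 and 20 entities.
example_urls = wbgetentities_urls([f"Q{n}" for n in range(1, 121)])
```

When the servers are lagging, a request carrying maxlag is answered with an error instead of data, and the client is expected to wait before retrying.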

Bots

You can also access the API by using a bot. For more about bots, see Wikidata:Bots.

Recent Changes stream

What is it?

The Recent Changes stream provides a continuous stream of changes from all Wikimedia wikis, including Wikidata: https://stream.wikimedia.org

When to use it?

Use the Recent Changes stream when your project requires you to react to changes in real time or when you need all the latest changes coming from Wikidata – for example, when running your own query service.

Details

The Recent Changes stream contains all updates from all wikis using the server-sent events protocol. You'll need to filter Wikidata's updates out on the client side.

You can find the web interface at stream.wikimedia.org and read all about it on the EventStreams page.
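Client-side filtering can be sketched as below. The stream path /v2/stream/recentchange, the wiki field with the value wikidatawiki, and the assumption that each event's JSON arrives on a single data: line are not stated in this document; check them against the EventStreams page.

```python
import json
import urllib.request

# Assumed stream path; see the EventStreams page for the current one.
STREAM_URL = "https://stream.wikimedia.org/v2/stream/recentchange"

# Hypothetical tool name and contact address; replace with your own.
USER_AGENT = "ExampleDataTool/0.1 (tool@example.org)"


def wikidata_changes(lines):
    """Yield decoded events for Wikidata from an iterable of SSE text lines."""
    for line in lines:
        if not line.startswith("data:"):
            continue  # skip "event:", "id:" and blank keep-alive lines
        try:
            change = json.loads(line[len("data:"):].strip())
        except json.JSONDecodeError:
            continue  # ignore payloads that are not complete JSON
        if isinstance(change, dict) and change.get("wiki") == "wikidatawiki":
            yield change


def stream_wikidata_changes():
    """Open the live stream and yield Wikidata events as they arrive."""
    request = urllib.request.Request(
        STREAM_URL,
        headers={"Accept": "text/event-stream", "User-Agent": USER_AGENT},
    )
    with urllib.request.urlopen(request) as resp:
        yield from wikidata_changes(raw.decode("utf-8") for raw in resp)
```

A production consumer would also reconnect after dropped connections and resume from the last event ID it saw; that is left out here for brevity.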

Dumps

What is it?

Wikidata dumps are complete exports of all the Entities in Wikidata: https://dumps.wikimedia.org

When to use it?

Use a dump when your result set is likely to be very large. You'll also find a dump important when setting up your own query service.

Don't use a dump if you need current data: the dumps take a very long time to export and even longer to sync to your own query service. Dumps are also unsuitable when you have significant limits on your available bandwidth, storage space and/or computing power.

Details

If the records you need to traverse are many, or if your result set is likely to be very large, it's time to consider working with a database dump: (link to the latest complete dump).

You'll find detailed documentation about all Wikimedia dumps on the "Data dumps" page on Meta and about Wikidata dumps in particular on the database download page. See also Flavored dumps above.
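Because a complete dump is far too large to load into memory, it is usually processed as a stream. The sketch below assumes the JSON dump layout of one large array with a single entity per line, each followed by a comma, and a gzip-compressed file whose path you supply; verify both against the database download page.

```python
import gzip
import json


def iter_entities(lines):
    """Yield one entity dict per line of a JSON dump.

    Assumes the file is a JSON array with "[" and "]" on their own lines
    and one entity per line, each followed by a trailing comma.
    """
    for line in lines:
        line = line.strip().rstrip(",")
        if line in ("", "[", "]"):
            continue
        yield json.loads(line)


def iter_dump(path):
    """Stream entities from a gzip-compressed JSON dump on disk."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        yield from iter_entities(handle)
```

Iterating over iter_dump with the path to your downloaded file then visits every entity exactly once while holding only one of them in memory at a time.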

Tools

- JsonDumpReader is a PHP library for reading dumps.

- At [1] you'll find a Go library for processing Wikipedia and Wikidata dumps.

- You can use wdumper to get partial custom RDF dumps.

Local query service

It's no small task to procure a Wikidata dump and implement the above tools for working with it, but you can take a further step. If you have the capacity and resources to do so, you can host your own instance of the Wikidata Query Service and query it as much as you like, out of contention with any others.

To set up your own query service, follow these instructions from the query service team, which include procuring your own local copy of the data. You may also find useful information in Adam Shorland's blog post on the topic.